By: Chris Bogdon

Amir Degani is an assistant professor at the Technion – Israel Institute of Technology, and Avi Kahnani is the CEO and Co-Founder of Israeli robotics start-up Fresh Fruit Robotics. Together, they are developing an apple harvesting robot that can autonomously navigate apple orchards and accurately pick fruit from the trees. I got the chance to sit down with Amir and Avi to learn more about the project. In our talk, they discussed the robot’s design, the challenges of apple picking, tree training and their experience demoing the robot for Microsoft’s CEO at the Think Next 2016 exhibition.

Tell me a little bit about CEAR Lab.

AD: I founded the CEAR Lab about four years ago after finishing my PhD and post doc at Carnegie Mellon University in Pittsburgh. I came back to the Technion in Israel and started the Civil, Environmental, Agricultural Robotics lab in the Faculty of Civil and Environmental Engineering. We work on soft robots, dynamic robots, and optimization of manipulators, mostly with civil applications, a lot of them related to agriculture. Other applications are search and rescue, automation in construction and environmental related work as well. But, generally, we’re mostly focused on agriculture robotics and robotic systems in the open field.

How did the apple harvesting robot project come to fruition?

AD: One of my PhD students is doing more theoretical work on task-based design of manipulators. Because cost is very important in agriculture, we’re trying to reduce price and find the optimal robot to do specific tasks. We’re actually seeing that different tasks, although they look very similar to us – apple picking, orange picking or peach picking – are actually very different if you look at the robot’s kinematics. We might need different joints, different link lengths and so on. So we’re collecting data and modeling trees, we’re doing optimization and we’re finding the optimal robot for a specific task. This is something we have been working on for a few years. As part of this work, we are not only designing the optimal robot, but are also looking at designing the tree – finding the optimal tree in order to further simplify the robot.

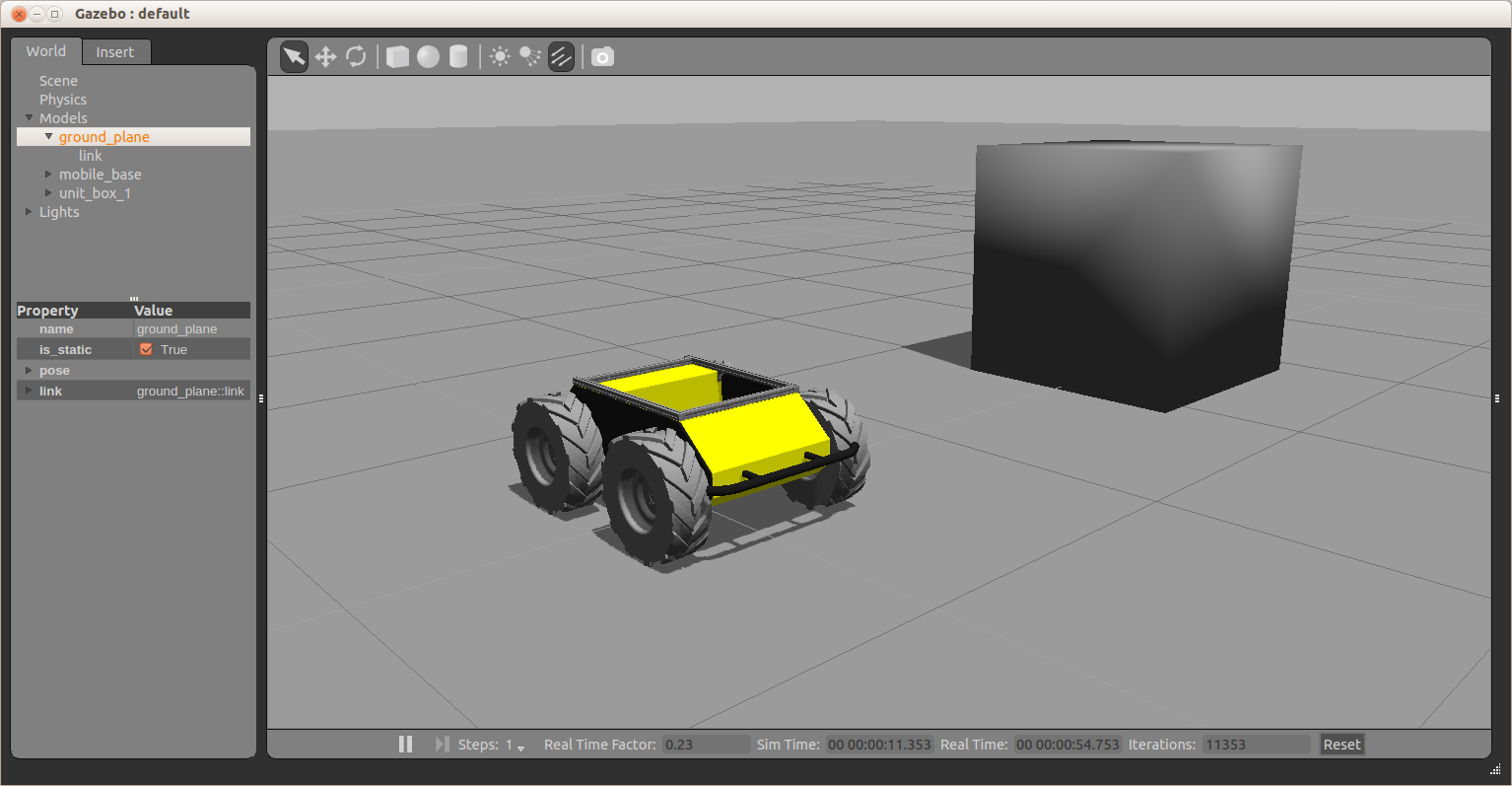

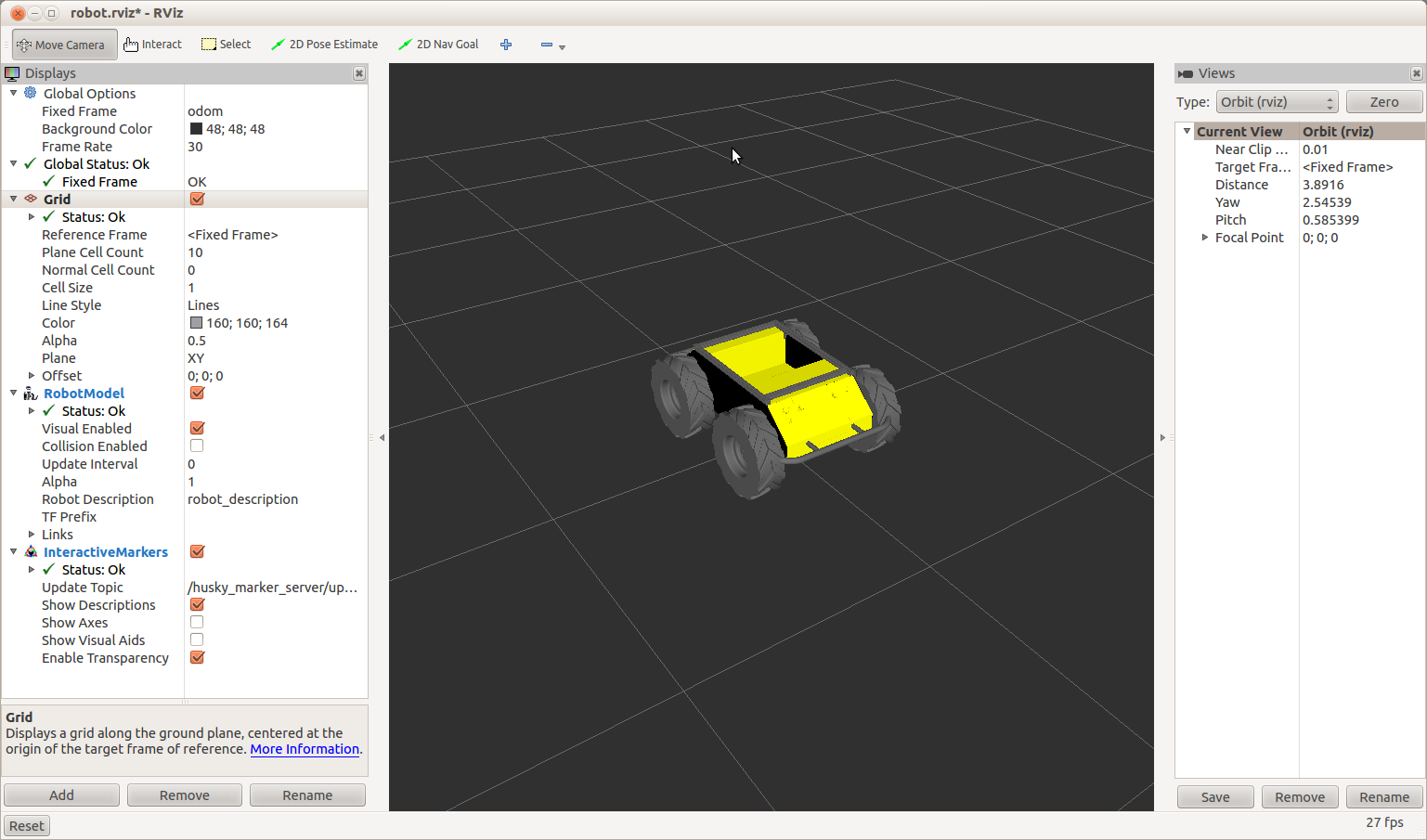

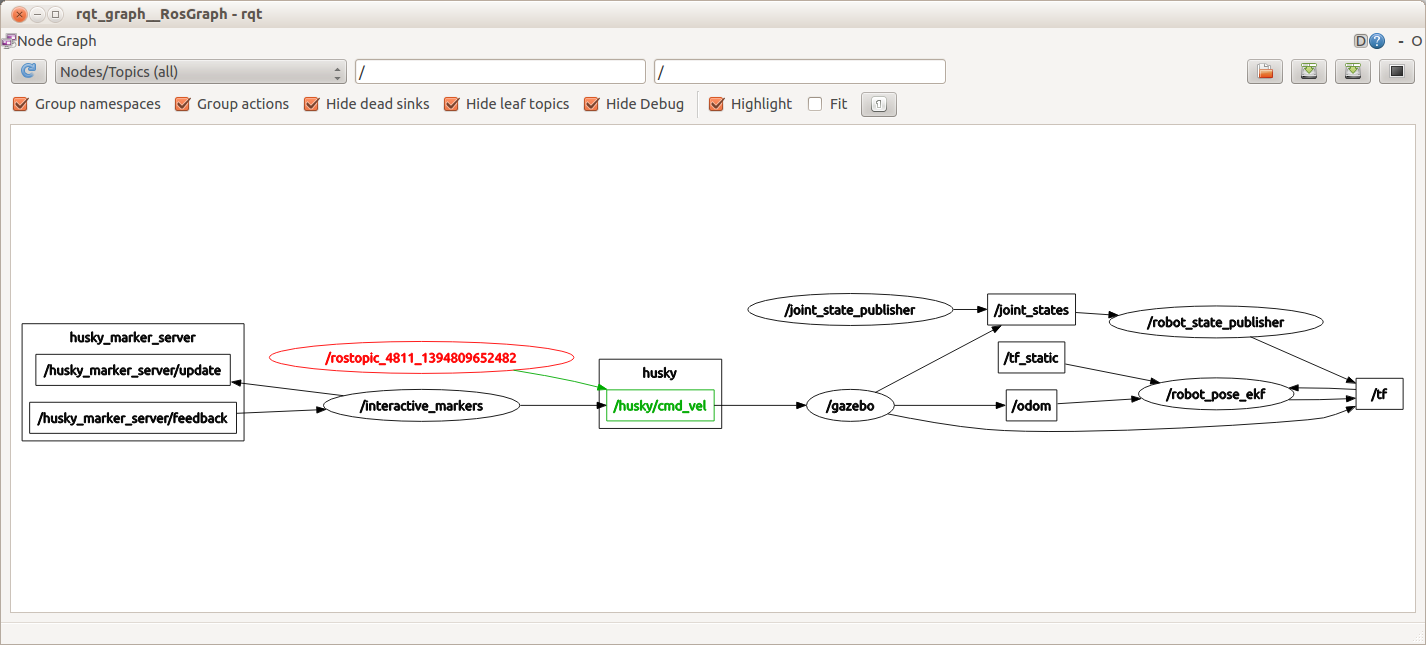

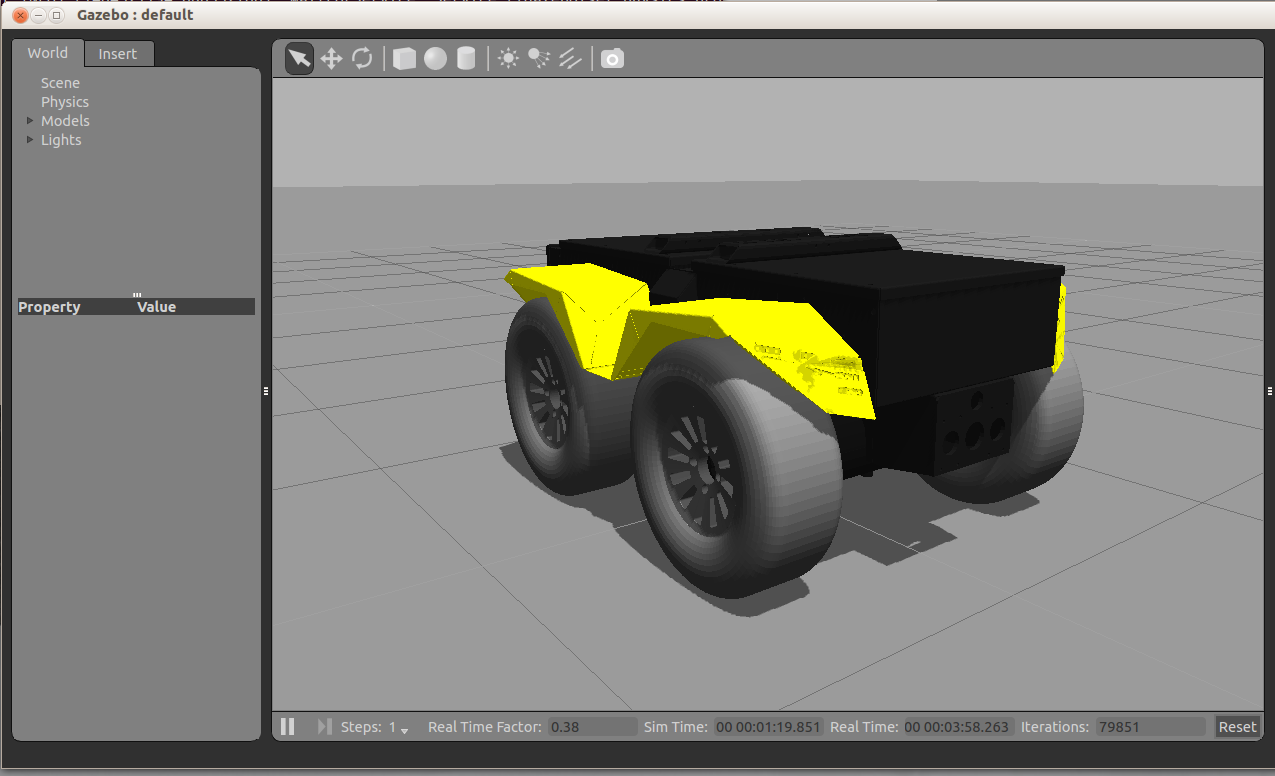

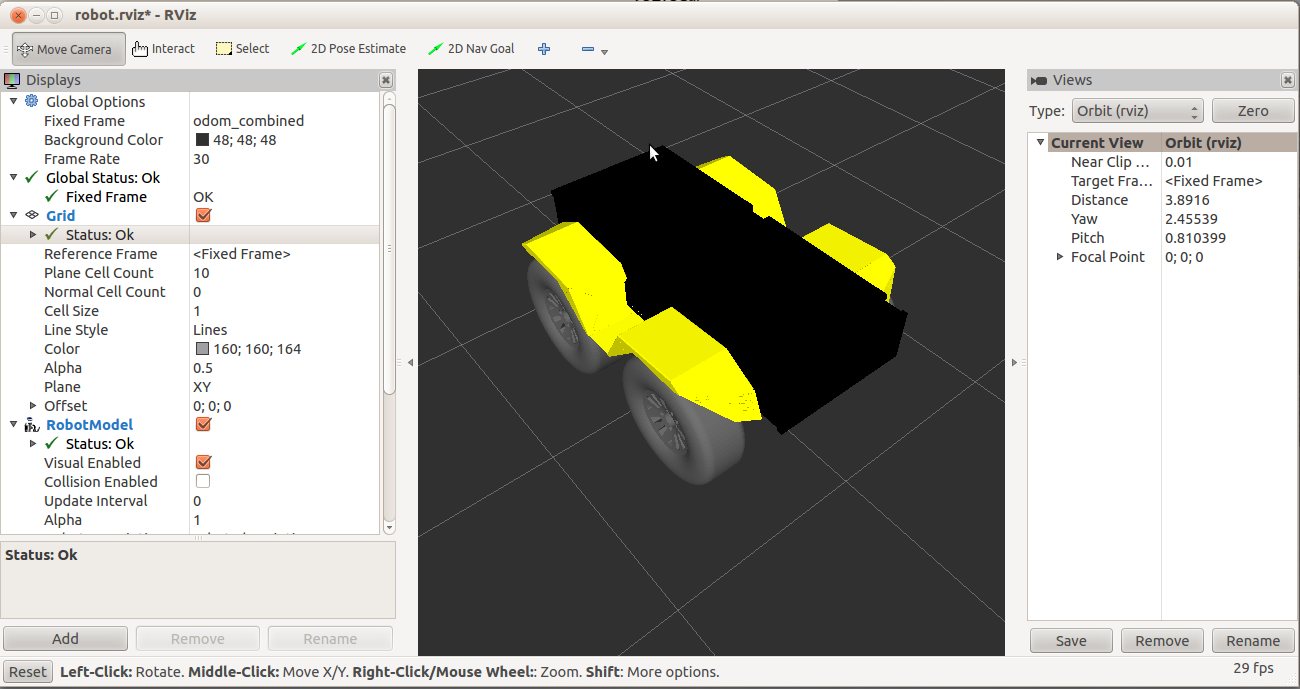

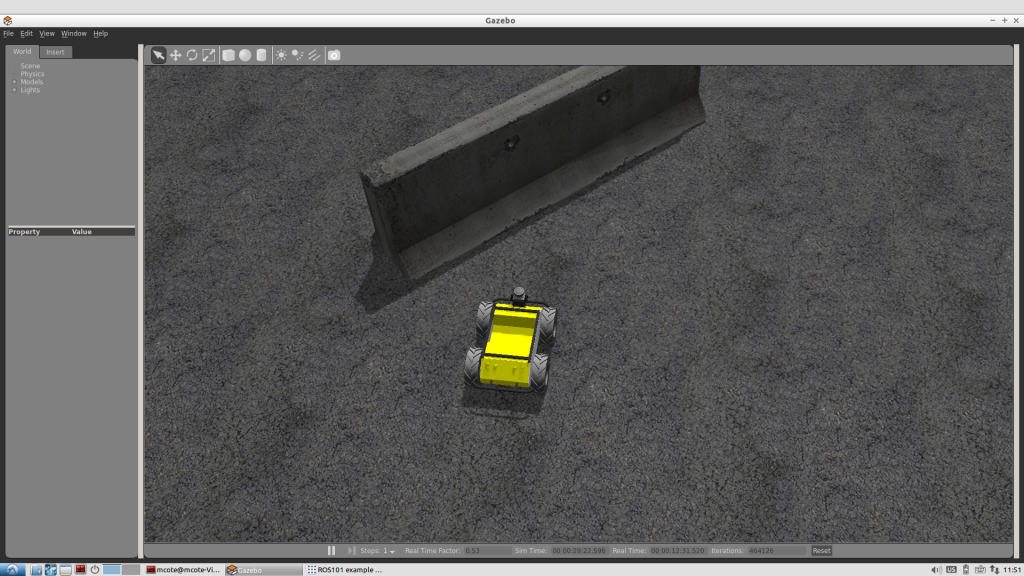

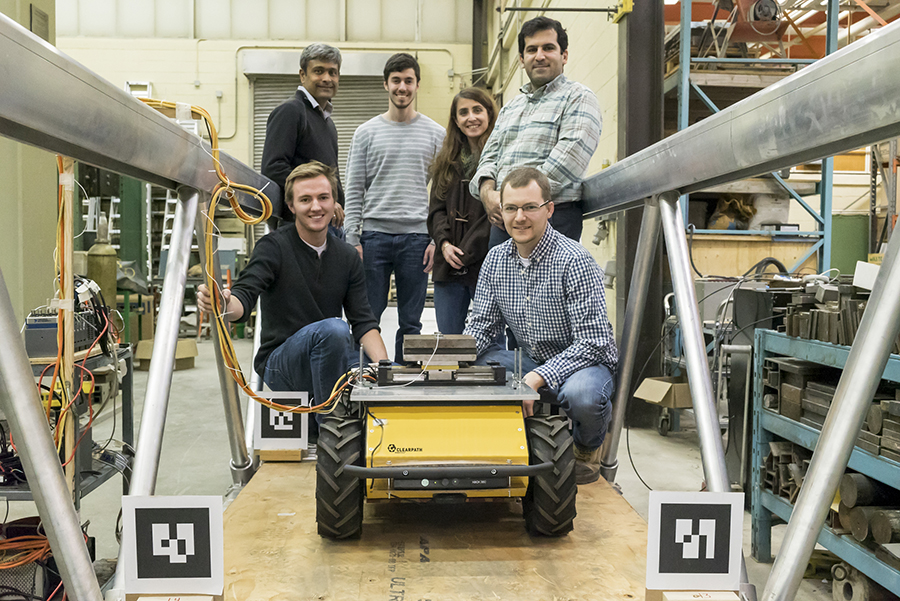

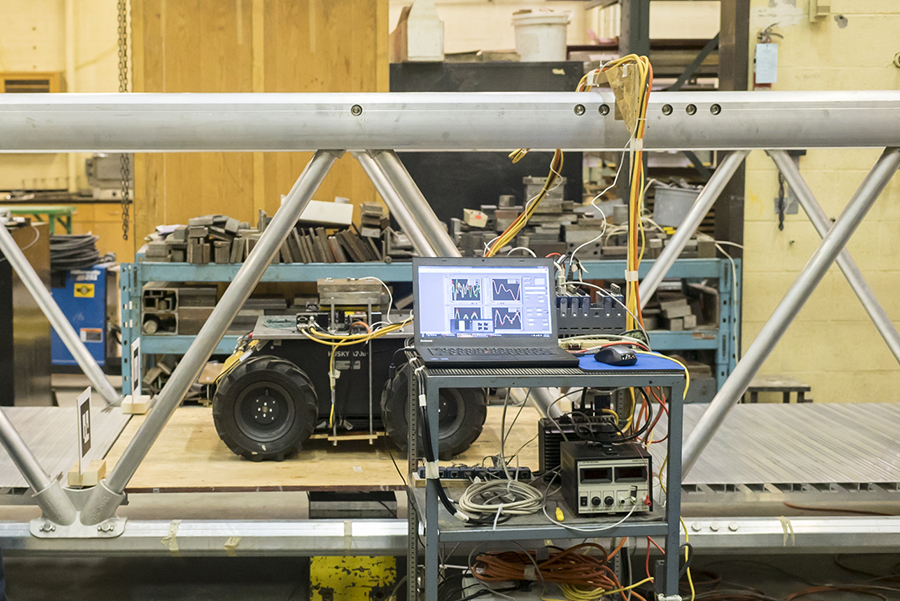

There is a new Israeli start-up called FFRobotics (Fresh Fruit Robotics or FFR). Avi, who is the CEO and Co-Founder, approached us a few years ago after hearing one of my students give a talk on optimization of a task-based harvesting manipulator. FFR are building a simple robotic arm – a three degree of freedom Cartesian robot, with the goal of having 8 or 12 of these arms picking apples (or other fruits) simultaneously. We were helping them with the arm’s optimization. We started collaborating, and then a few months ago Microsoft approached us and asked us to exhibit at Think Next, the exhibition they hold every year. So we decided to put one of the arms on our Grizzly RUV robot, which fit pretty nicely. We brought those to the demo, along with a few Jackals, and had a pretty good time!

Tell me a bit about how the robot works and its components.

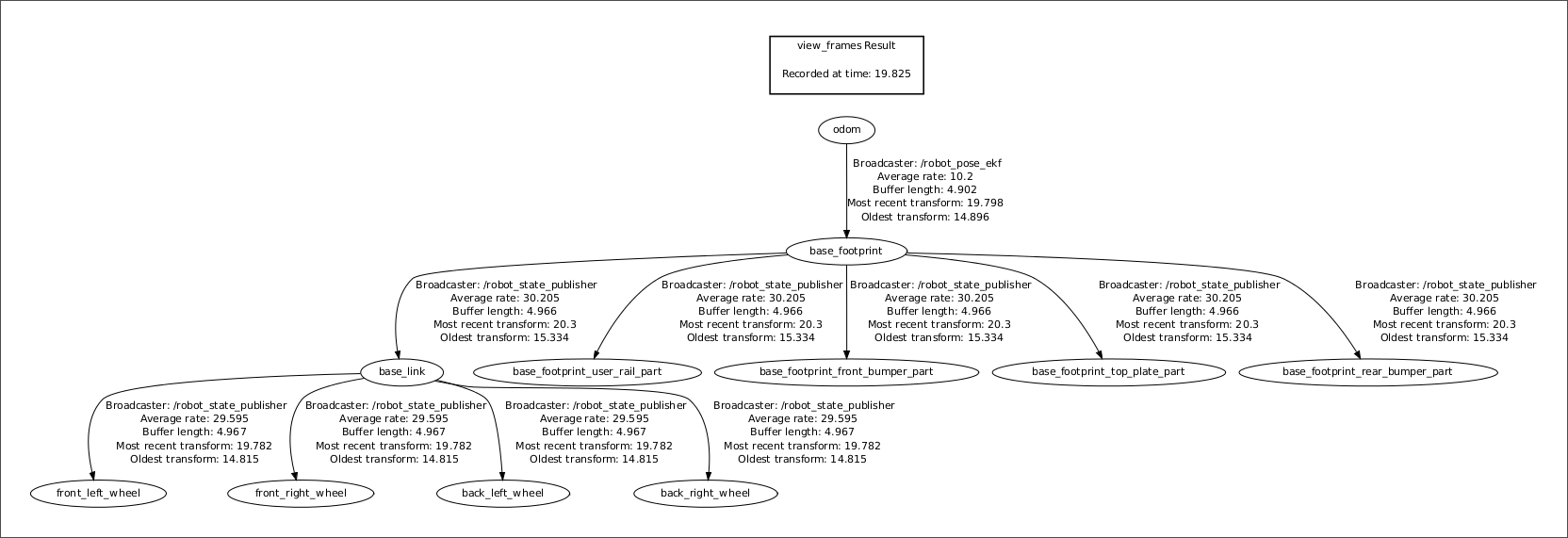

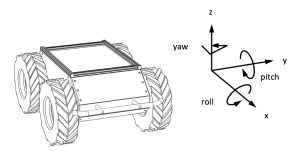

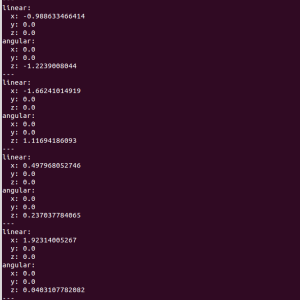

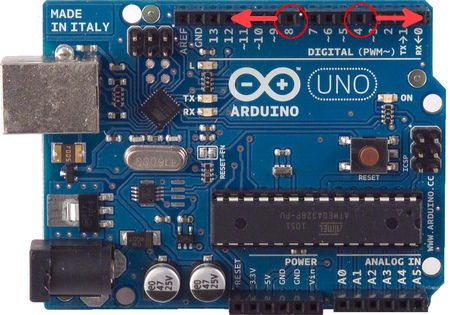

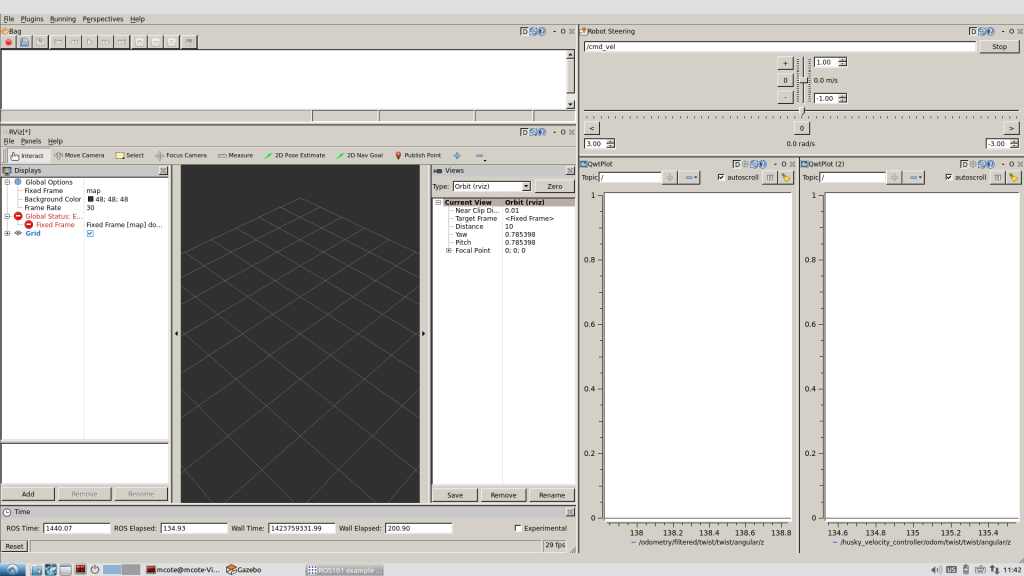

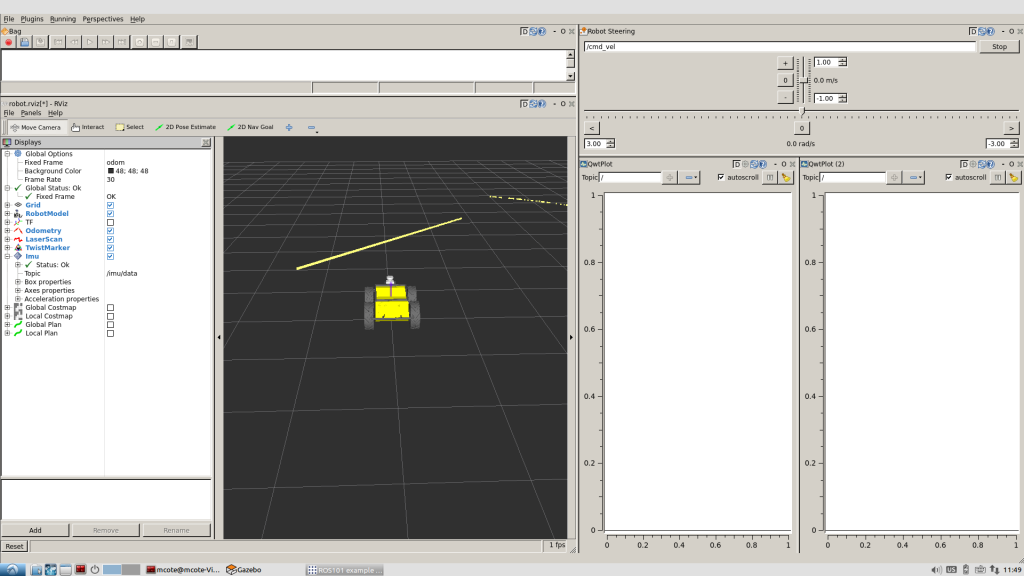

AD: So the FFR arm has a vision system to segment and detect the apples. After it finds an apple, it gives the controller the XYZ location and the arm then reaches for the fruit. It has an underactuated gripper at the end with a single motor, which grips the apple and rotates it about 90 degrees to detach it. The arm doesn’t go back to the home position; it just retracts a bit and lets go, and the apples go into a container. In our lab, we are concentrating right now on the automation of the mobile robots themselves – on the Grizzly, Husky, and Jackals – we have a few of your robots. Fresh Fruit Robotics is working primarily on the design of the manipulator.
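
As a rough illustration of the pick sequence Amir describes (detect, reach, grip, twist about 90 degrees, retract a little, release), the steps can be written out as an ordered command list. This is a minimal sketch, not FFR's controller: the command names, the `Apple` type, the choice of `x` as the reach axis and the 0.2 m retraction distance are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Apple:
    """XYZ location reported by the vision system (metres, hypothetical frame)."""
    x: float  # assumed reach axis, pointing from the arm toward the tree
    y: float
    z: float


Command = Tuple[str, tuple]


def pick_cycle(apple: Apple, retract_m: float = 0.2) -> List[Command]:
    """Return the ordered commands for one pick.

    The order mirrors the interview: reach, grip, rotate ~90 degrees,
    retract slightly (no return to a home position), then release.
    """
    return [
        ("move_to", (apple.x, apple.y, apple.z)),              # reach for the fruit
        ("close_gripper", ()),                                 # single-motor grip
        ("rotate_gripper_deg", (90.0,)),                       # twist to detach
        ("move_to", (apple.x - retract_m, apple.y, apple.z)),  # retract a bit
        ("open_gripper", ()),                                  # drop toward the container
    ]


if __name__ == "__main__":
    for name, args in pick_cycle(Apple(1.0, 0.5, 1.2)):
        print(name, args)
```

Keeping the cycle as plain data makes it easy to see that the arm never homes between picks, which is the time-saving detail mentioned above.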

What kind of vision sensors are being used?

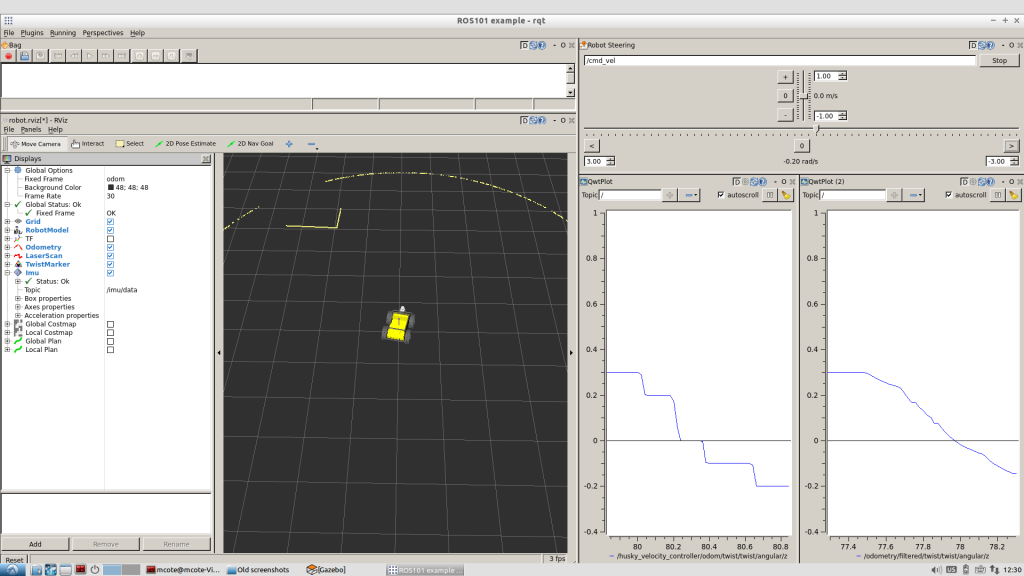

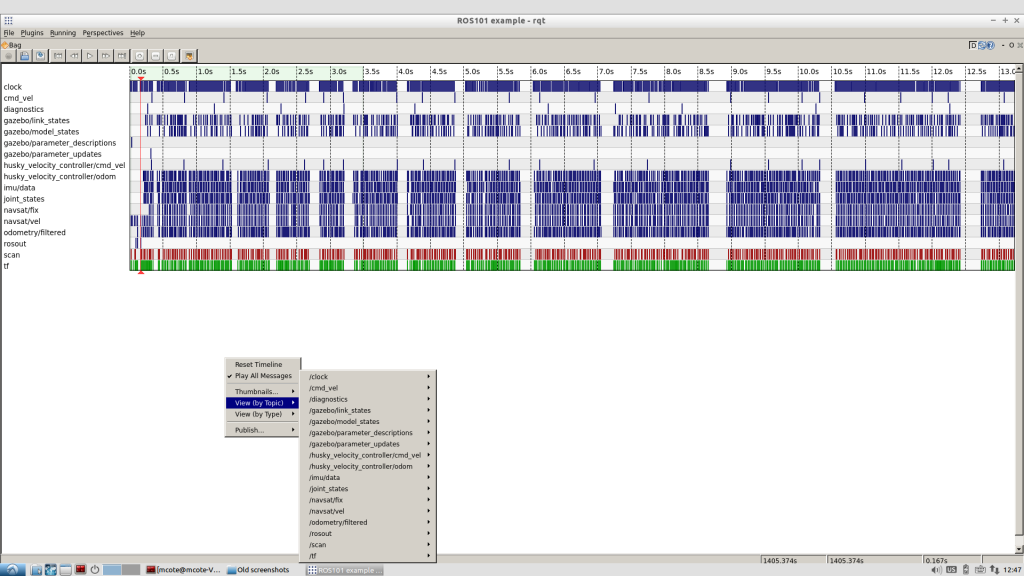

AD: On the Grizzly, we have an IMU, a SICK LIDAR in front, a stereo camera on a pan-tilt unit, and a dGPS. The arm uses a Kinect to do the sensing, but FFR is also looking into time-of-flight sensors to provide better robustness in the field.

What would you say is the most challenging part of automating apple picking?

AD: I believe that the most difficult thing is making something cheap and robust. I think the best way is to not only think of the robot but also to train the trees to be simpler. Now this has been done in the past decade or two to simplify human harvesting. In extreme cases, you can see fruiting walls that are nearly planar. They did this to make it a bit simpler for human harvesters. This is even more important for robotic harvesting. Trying to get a robot to harvest apples on a totally natural tree is extremely complex, and you will most likely need a 6-7 degree of freedom robot to do it. In terms of vision, perception will be very difficult. People have tried it in the past and it just doesn’t make sense and makes everything too expensive for farmers to use.

By taking simpler trees, ones that were trained and pruned as the ones in our collaboration with Fresh Fruit Robotics, you can actually use a three degree of freedom robot – the simplest Cartesian robot – to do the picking. But, I think you can even go further to make the tree in a more optimal shape for a robot, let’s say a quarter of a circle. This may not be good for a human, but might be perfect for a robot and perhaps will allow us to use simpler robots that only need two degrees of freedom. So, making the system robust while keeping costs down is the hardest part and in order to do that you have to work on the tree as well.

How exactly do you train a tree?

AD: Training systems such as a fruiting wall require high-density planting while ensuring that the trunk and branches are not too thick. In order to do that you have to support them with a trellis and other engineered support systems. You want to make them optimal so that all the energy and water goes mostly to the fruit and not the tree itself. This has been done for a while now, and we are essentially piggybacking on that. The simplification of trees may be even more important for robotics than for humans, if we want robots to go into fruit picking and harvesting.

Can the robots autonomously navigate and patrol the apple orchards?

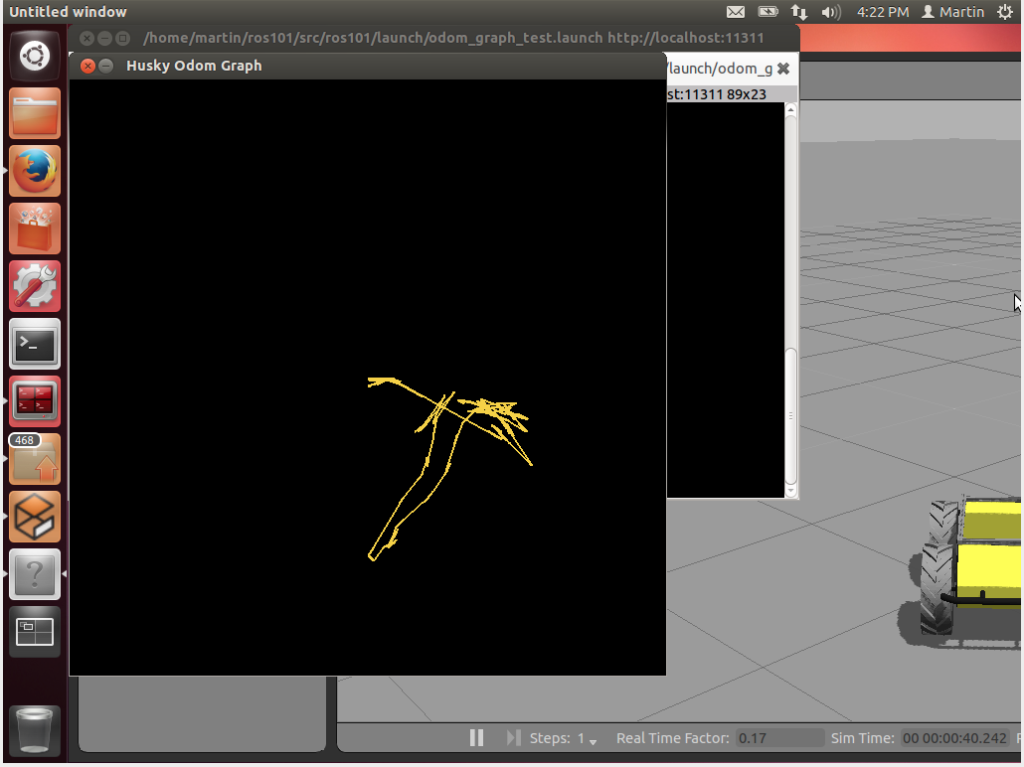

AD: Not at the moment. We are in the process of doing it now on all of our robots. Right now we are trying to do full SLAM on an orchard in order to do patrolling for harvesting. This is the goal we are aiming for this summer.

How do you compensate for weather effects?

AD: In Israel the weather is relatively mild, so the problem is usually with sun and wind rather than snow and rain. The main problem the weather creates is with the perception of the robot, which has to compensate for changes in light and in the position of the objects. To overcome this, FFR uses a cover, like a tent, to shield the tree and the robot. If it’s windy, you have to use fast closed-loop control because if the target starts moving after it’s been perceived, the system has to keep on tracking it in order for the gripper to accurately grip the object where it is and not where it was 10 seconds ago.
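
The point about closed-loop control in wind can be illustrated with a toy one-dimensional simulation. It is only a sketch under invented assumptions: the apple sways sinusoidally, the gripper follows a simple proportional law, and every number (amplitude, period, gain, duration) is hypothetical rather than taken from the FFR system. The open-loop case aims at the position perceived once at the start; the closed-loop case re-measures the target on every step.

```python
import math


def target_position(t: float, base: float = 1.0,
                    amplitude: float = 0.05, period_s: float = 2.0) -> float:
    """Hypothetical apple position (m) swaying in the wind along one axis."""
    return base + amplitude * math.sin(2.0 * math.pi * t / period_s)


def final_miss(closed_loop: bool, duration_s: float = 2.5,
               dt: float = 0.01, gain: float = 10.0) -> float:
    """Distance between gripper and apple at the end of the reach."""
    gripper = 0.0
    goal = target_position(0.0)  # single perception at the start
    steps = int(round(duration_s / dt))
    for i in range(steps):
        if closed_loop:
            goal = target_position(i * dt)  # keep tracking the moving apple
        # Proportional step toward the current goal (explicit Euler update).
        gripper += gain * (goal - gripper) * dt
    return abs(target_position(duration_s) - gripper)


if __name__ == "__main__":
    print("open-loop miss (m):  ", final_miss(closed_loop=False))
    print("closed-loop miss (m):", final_miss(closed_loop=True))
```

In this toy setup the open-loop reach ends where the apple was, while the tracking version ends much closer to where it is, which is the behaviour described in the answer above.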

Why did you choose the Grizzly RUV for this project?

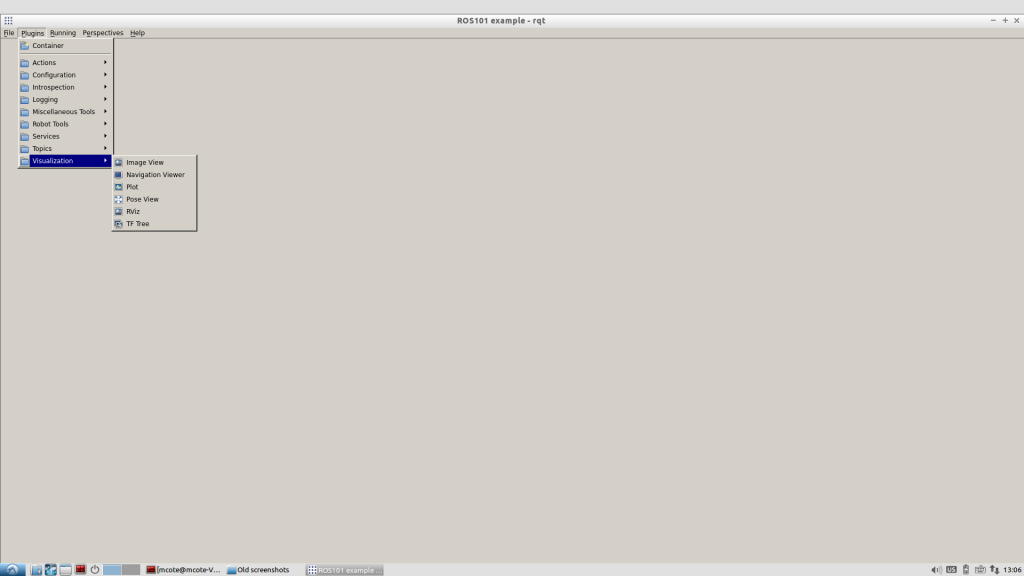

AD: We’ve had the Husky UGV for a while. We also have some older robots from 10 years ago, such as a tractor that we modified to be semi-autonomous. But, none of these vehicles were strong enough to actually do real work while being easily controllable. We wanted to carry bins full of fruit which weigh more than half a ton and we didn’t have a robot that could do that. Also, I wanted to have something that my students could take and apply the algorithms to a real-sized robot that could work in the field. We started with a TurtleBot to learn ROS, and then moved to the Husky. Then I wanted something I could just scale up and actually move to the field. That’s what we’re doing right now. It’s pretty new, so we’re in the early stages of working with it.

How did you find the integration process?

AD: Mechanical integration was very easy. We have not yet fully completed the electrical integration – instead we have two separate controllers and two separate electrical systems. Later on it will be relatively easy for the electrical system to be plugged into the robot since it uses the same voltage more or less. But right now it is decoupled.

Are you at a point where you can quantify the efficiency and cost benefits of the robots compared to manual picking?

AK: We designed the system to pick approximately 10,000 high-quality fruits per hour – that is, without damaging the fruit while picking. Assuming it works during the day only, the robot could potentially save farmers 25% in harvesting costs. It is important to understand that the same system will be able to pick other fresh fruits by replacing the end effector (gripper) and the software module. This option to harvest multiple fruit types increases the efficiency of the system dramatically.
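
To get a feel for what the 10,000 fruits per hour target implies, one can combine it with the 8 or 12 arms mentioned earlier in the interview. That pairing is an assumption made here for illustration; the interview does not say how many arms the target figure refers to, and the calculation ignores time spent driving between trees.

```python
def seconds_per_pick(fruits_per_hour: float, arms: int) -> float:
    """Average time each arm has for one pick if all arms work in parallel."""
    return 3600.0 * arms / fruits_per_hour


if __name__ == "__main__":
    for arms in (8, 12):  # arm counts mentioned in the interview
        t = seconds_per_pick(10_000, arms)
        print(f"{arms} arms: about {t:.2f} s per pick per arm")
```

Under these assumptions each arm would have roughly three to four seconds per apple.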

What was it like to demo the robot to Satya Nadella, CEO of Microsoft, at the Think Next conference?

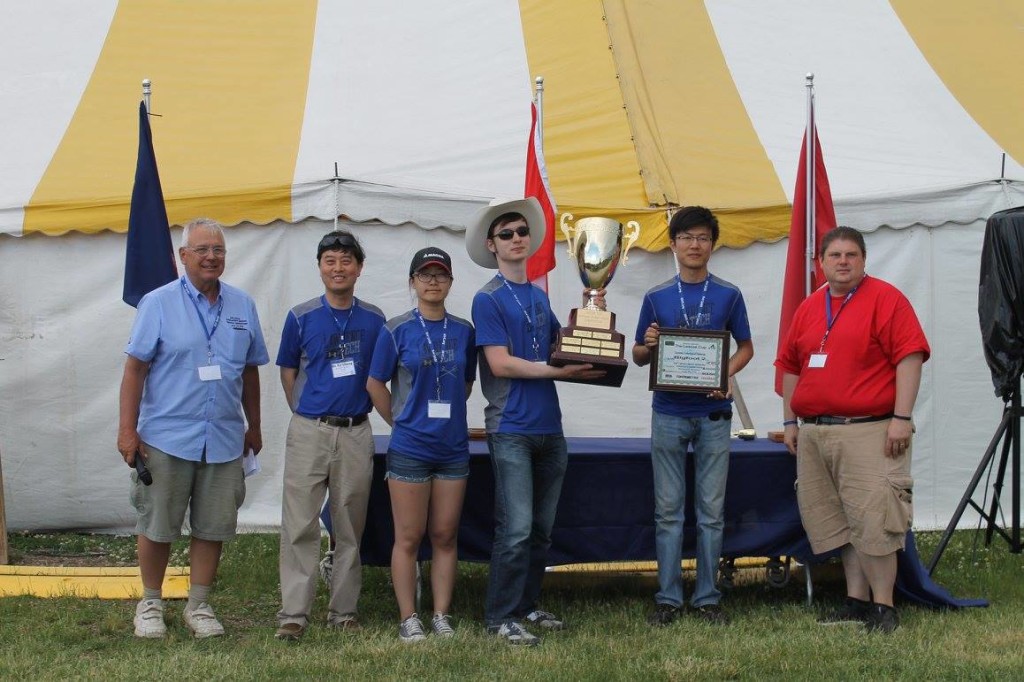

AD: It was exciting! He didn’t have a lot of time to spend at our booth. But it was an exciting exhibition with many demonstrators. We had the biggest robot for sure. It was fun! The FFR arm picked apples from the tree, and dropped them in the Jackal. Then, we drove the Jackal around delivering picked apples to people and they liked it. For safety reasons, we didn’t move the Grizzly. We just parked it and it didn’t move a centimeter the whole time. It was fun bringing such a big robot to the demo.

How close are you to a commercial release?

AK: Following last year’s field tests, we believe the commercial system is about two years away. During the summer of 2016 we plan to test a fully integrated system in the apple orchard, picking fruits from the trees all the way to the fruit bin. The first commercial system will be available for the 2017 apple picking season.

What is next for CEAR lab?

AD: We will continue working on the theoretical part of the optimization of the robotic arms. We’re looking into new ideas on re-configurability of arms – having arms doing different tasks and pretty easily switching from one task to another. With the Grizzly, we’re working on autonomous navigation of the apple orchards and are also working on a non-agricultural project related to search and rescue. We designed a suspension system on the Grizzly and mounted a stretcher on top of it. The motivation is to be able to evacuate wounded from harm’s way autonomously in rough terrain.

We are pretty happy with our robots. It’s a big family! We pretty much have all of Clearpath’s robots. Technical support is great and it’s been fun!

The post Interview: The Team Behind The Apple Harvesting Robot appeared first on Clearpath Robotics.

by Nicholas Charron