By Ryan Gariepy

For our recent “hack week” we teamed up with one of the most innovative visual technology companies in the world, Christie, to make a 3D video game with robots. How did it all come together?

As seems to be the norm, let’s start with the awesome:

Inspiration

In late October, a video from MIT was making the rounds at Clearpath. Since we usually have a lot of robots on hand and like doing interesting demos, we reached out to Shayegan to see if we could get some background information. He provided some insights into the challenges he faced when implementing this, and I was convinced that our team could pull a similar demo together so we could see how it worked in person.

Here’s what MIT’s setup looked like:

(Video: Melanie Gonick, MIT News. Additional footage and computer animations: Shayegan Omidshafiei)

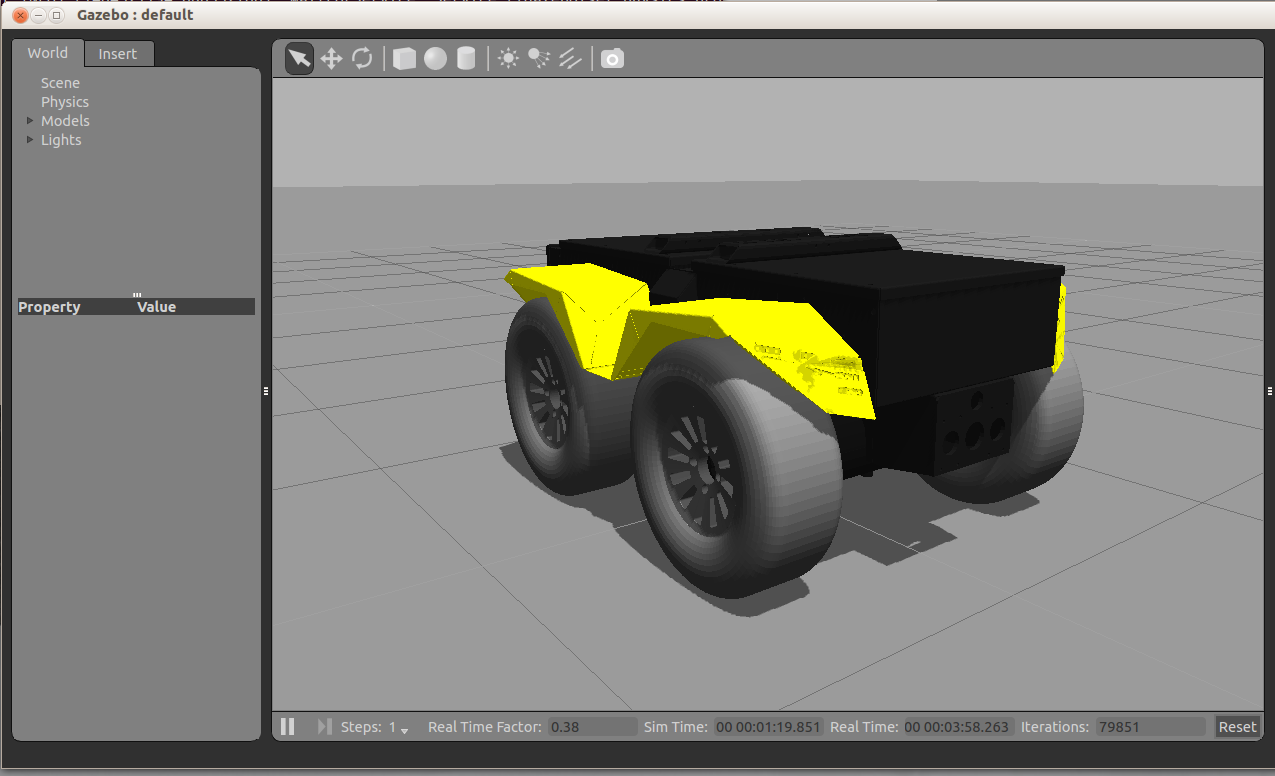

At the most fundamental, this demo needs:

- A small fleet of robots

- A way for those robots to know where they are in the world

- A computer to bring all of their information together and render a scene

- A method of projecting the scene in a way which is aligned with the same world the robots use

In MIT’s case, they have a combination of iRobot Creates and quadrotors as their robot fleet, a VICON motion capture system for determining robot location, a Windows computer running ROS in a virtual machine as well as projector edge-blending software, and a set of 6 projectors for the lab.

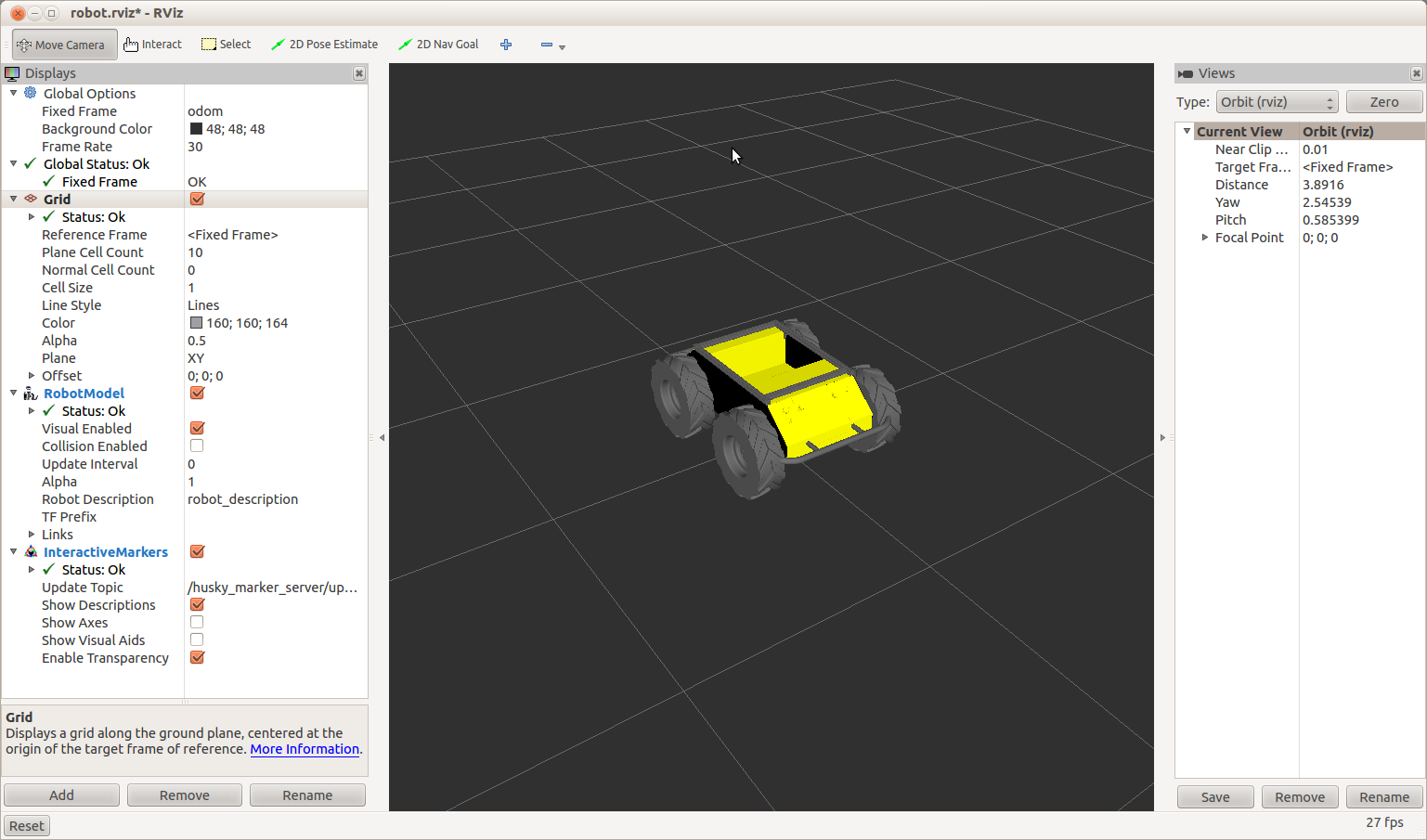

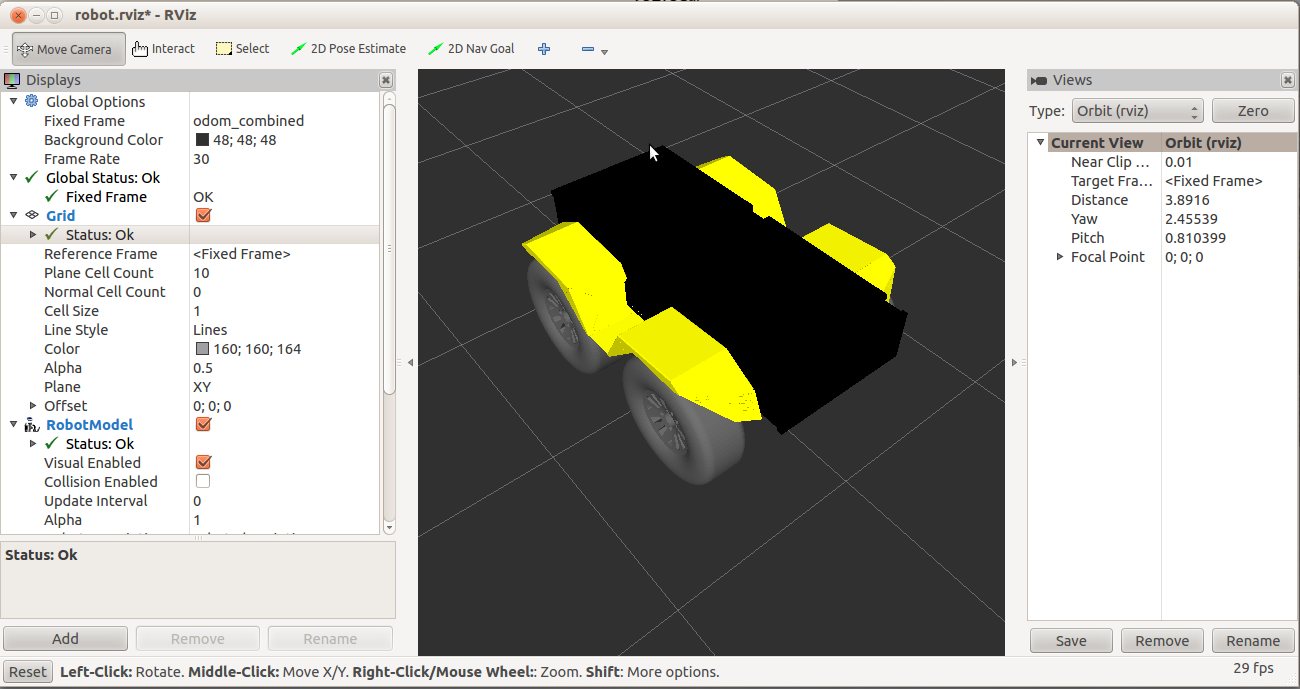

There were three things we wanted to improve on for our demo. First, we wanted to run all-ROS, and use RViz for visualizations. That removes the performance hit from running RViz in a VM, and also means that any visualization plugins we came up with could be used anywhere Clearpath uses ROS. Second, we wanted to avoid using the VICON system. Though we have a VICON system on hand in our lab and are big fans, we were already using it for some long-term navigation characterization at the time, so it wasn’t available to us. Finally, we wanted to make the demo more interactive.

Improvement #1: All-ROS, all the time!

To get this taken care of, we needed a way to either run edge-blending software on Linux, or to use projectors that did the edge blending themselves. Fortunately, a little-known fact to our regular audience may be that Christie is about 10 minutes away from Clearpath HQ, and they make some of the best digital projectors in the world that, yes, do edge blending and more. A few emails back and forth, and they were in!

For this project, Christie arrived with four Christie HD14K-M 14,000-lumen DLP® projectors and two cameras. The projectors use Christie AutoCal software and have Christie Twist software embedded right in. Christie rigged the four projectors in a 2 x 2 configuration on the ceiling of our warehouse. The cameras captured what was happening on the floor and sent that information to the Christie AutoCal software, which then automatically aligned and blended the four projectors into one giant, seamless, 30-foot projection-mapped digital canvas.
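Christie AutoCal handled alignment and blending automatically, so nothing below reflects its internals. As a rough, hypothetical illustration of what edge blending means, this sketch computes the gain for two horizontally overlapping projectors: inside the overlap the light from each one ramps linearly so the total stays constant, and the drive value is raised to 1/gamma because projector output is roughly proportional to input raised to gamma (an assumed gamma of 2.2 here).

```python
def blend_weights(x: float, overlap_start: float, overlap_end: float, gamma: float = 2.2):
    """Return (left_gain, right_gain) drive values at horizontal position x.

    Hypothetical sketch of a linear edge-blend ramp between two projectors.
    The *light* from each projector ramps linearly across the overlap so the
    two always sum to 1; since light ~ input ** gamma, the input gain is the
    desired light fraction raised to 1 / gamma.
    """
    if x <= overlap_start:
        t = 0.0  # only the left projector covers this point
    elif x >= overlap_end:
        t = 1.0  # only the right projector covers this point
    else:
        t = (x - overlap_start) / (overlap_end - overlap_start)
    left_light, right_light = 1.0 - t, t
    return left_light ** (1.0 / gamma), right_light ** (1.0 / gamma)


# Example: an overlap covering 45% to 55% of the combined image width.
for x in (0.0, 0.45, 0.5, 0.55, 1.0):
    left, right = blend_weights(x, 0.45, 0.55)
    print(f"x={x:.2f} left={left:.3f} right={right:.3f} "
          f"light_sum={left ** 2.2 + right ** 2.2:.3f}")
```

The `light_sum` column stays at 1.0 everywhere, which is the point of the ramp: no bright seam where the projectors overlap.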

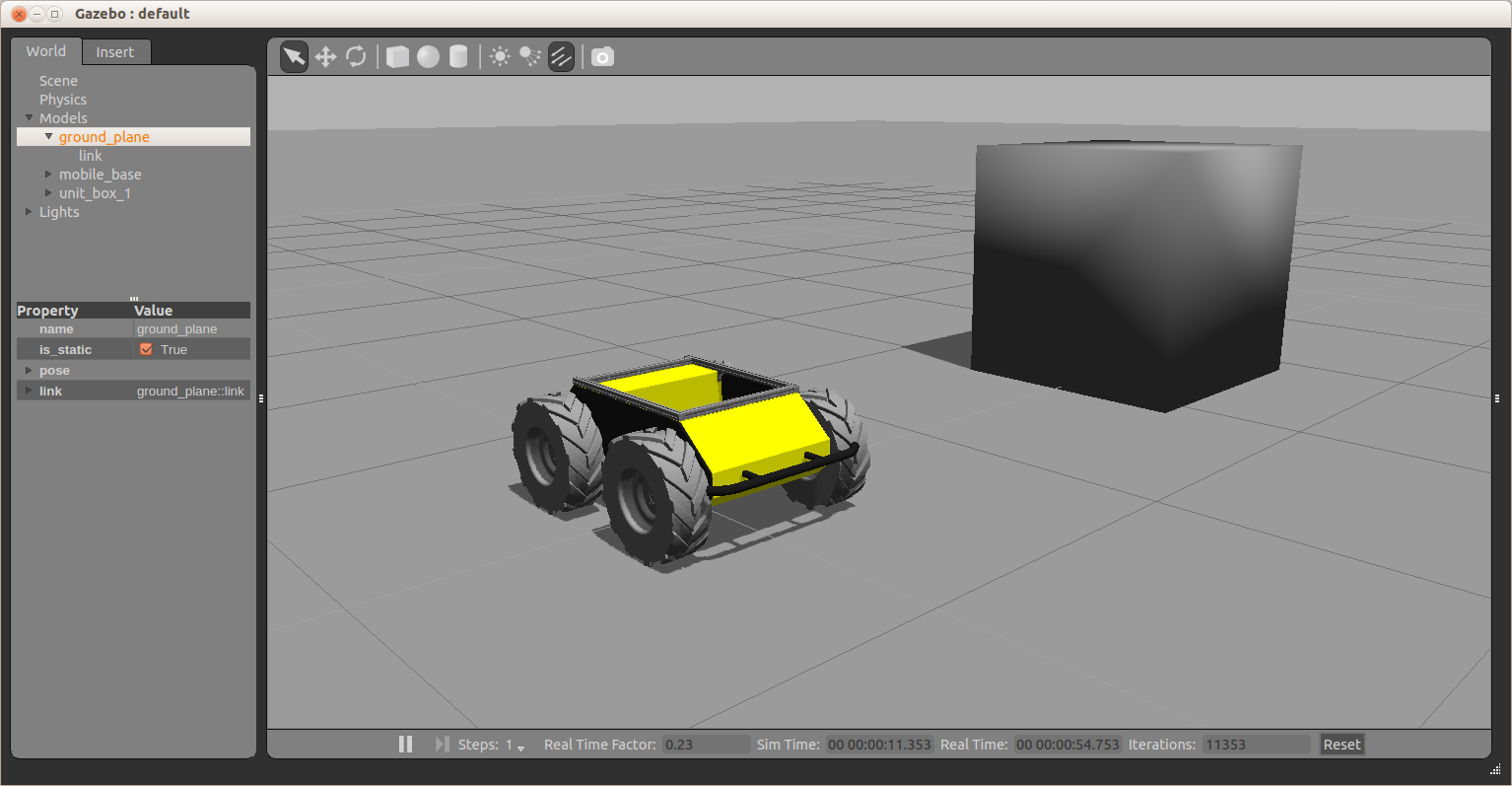

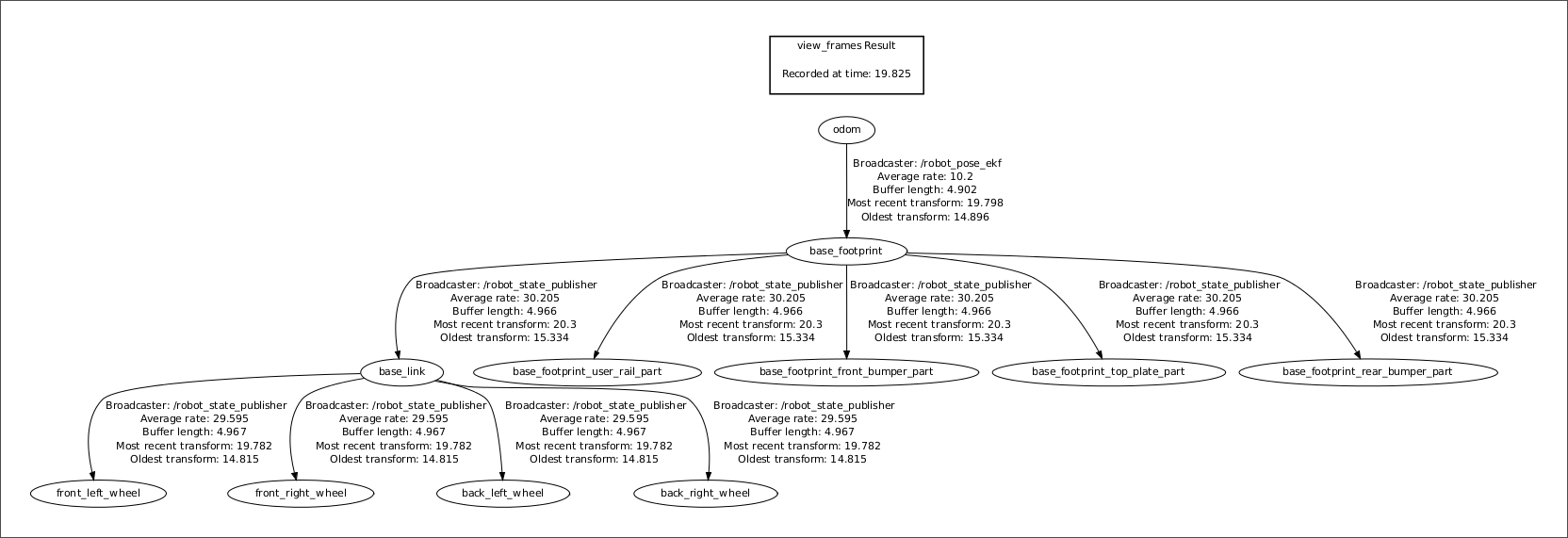

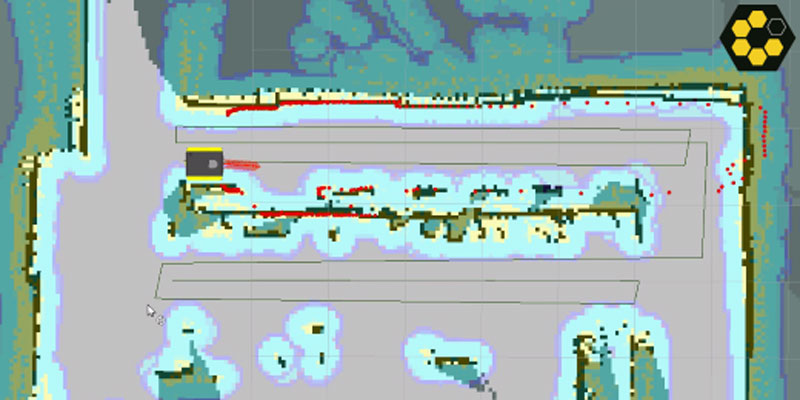

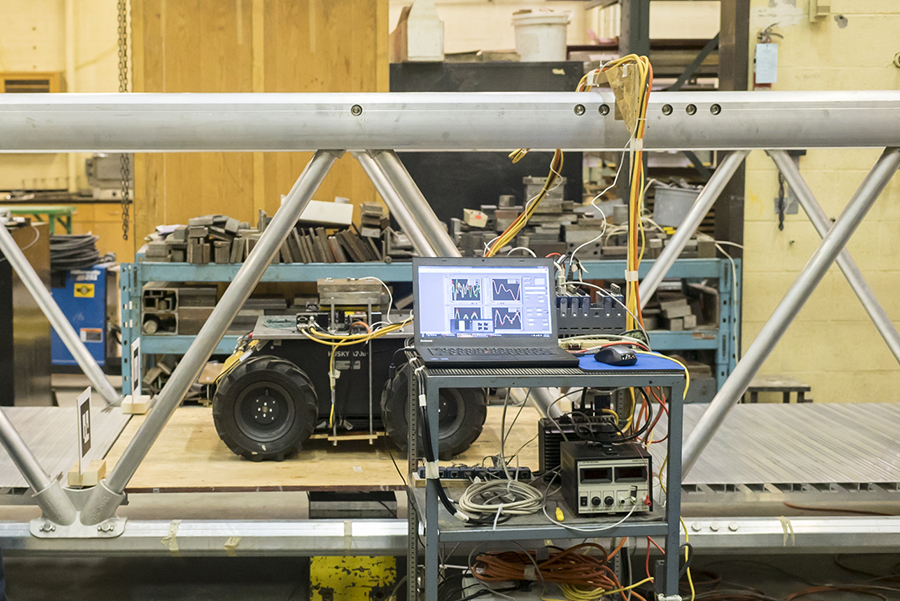

Improvement #2: No motion capture

Getting rid of the motion capture system was even easier. We already have localization and mapping software for our robots and the Jackals we had on hand already had LIDARs mounted. It was a relatively simple matter to map out the world we’d operate in and share the map between the two robots.

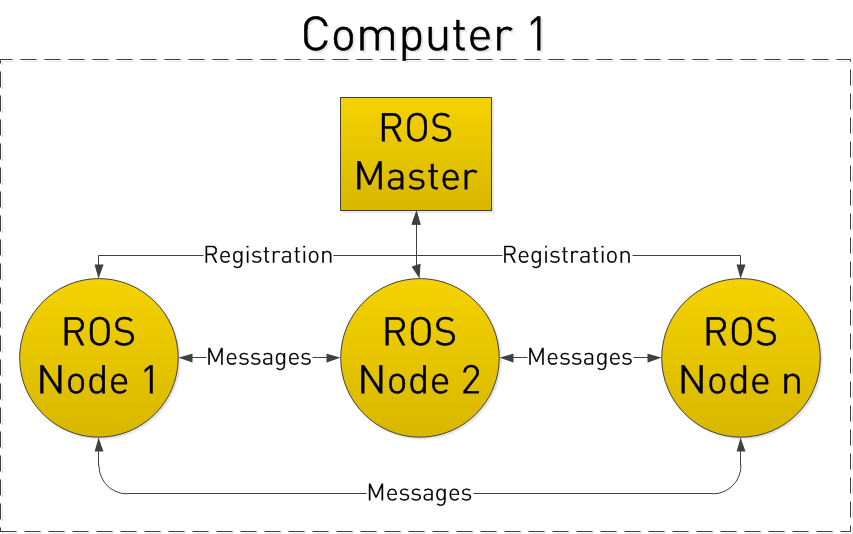

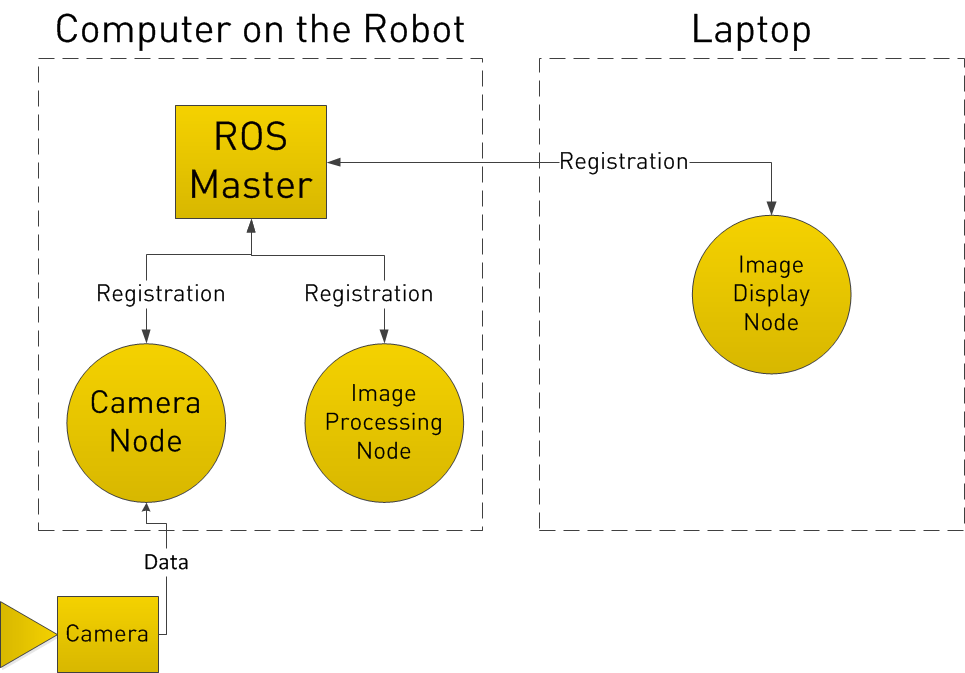

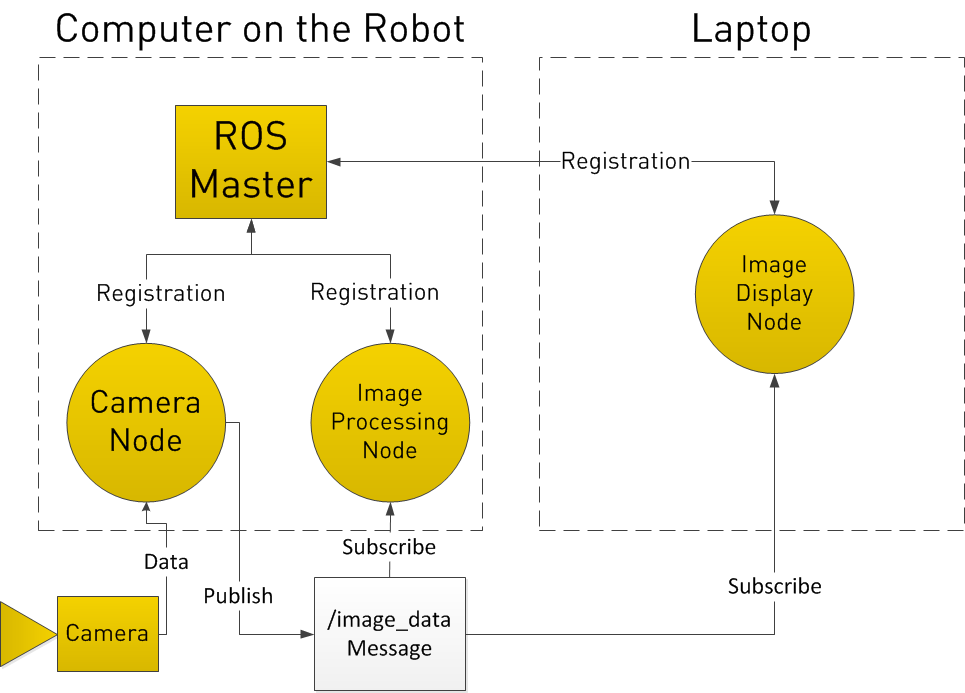

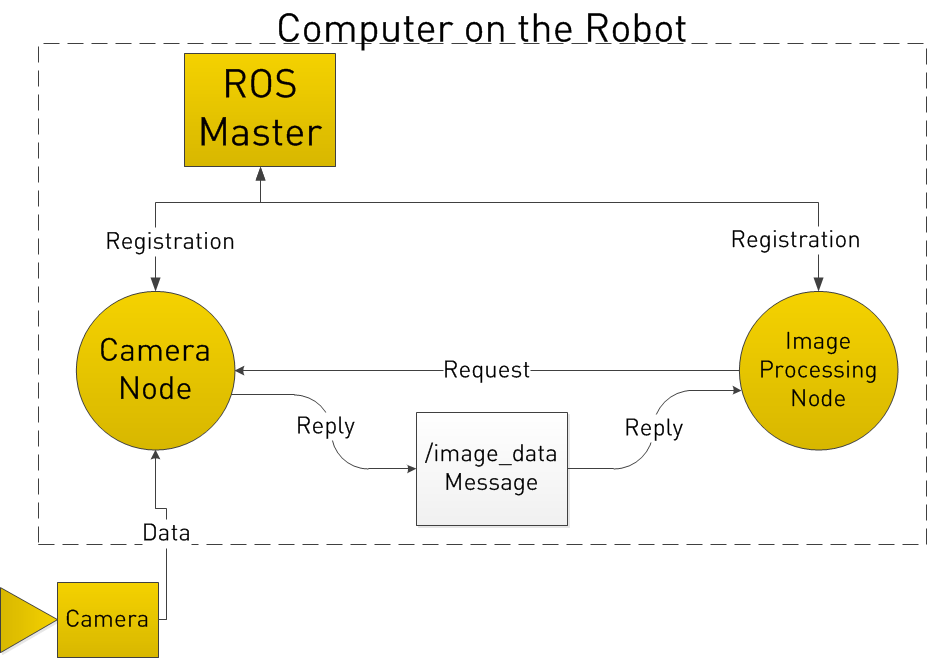

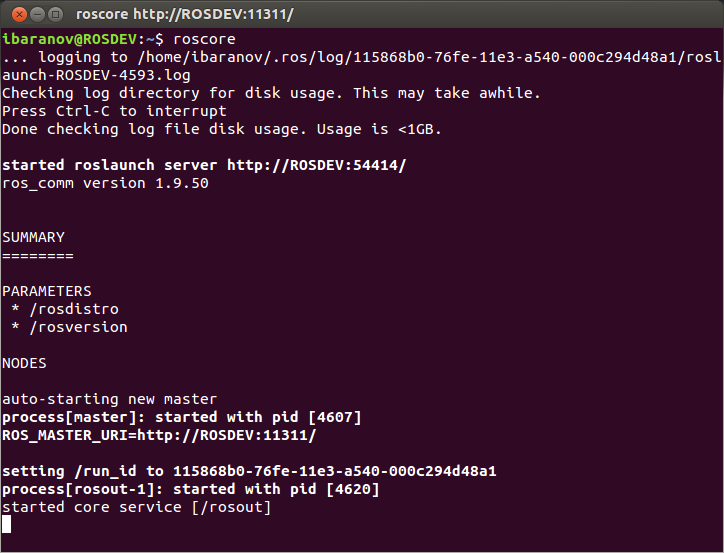

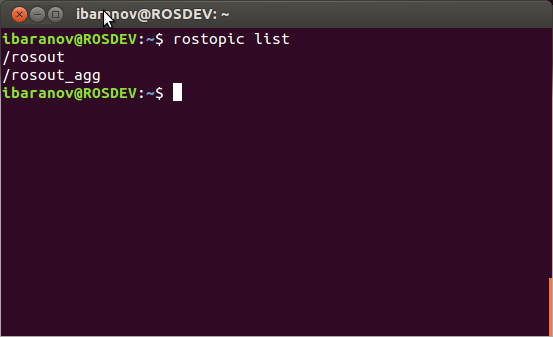

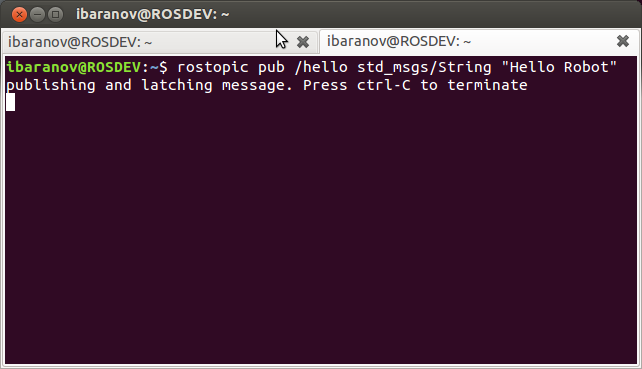

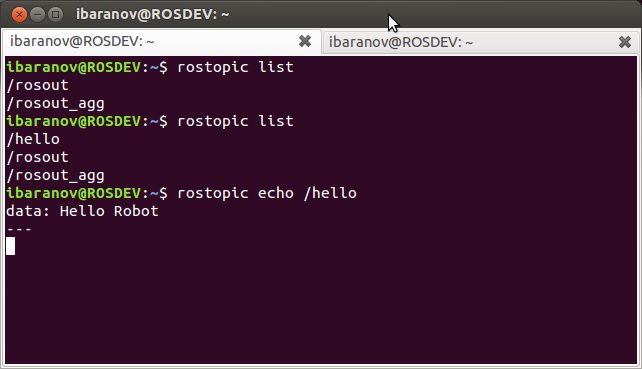

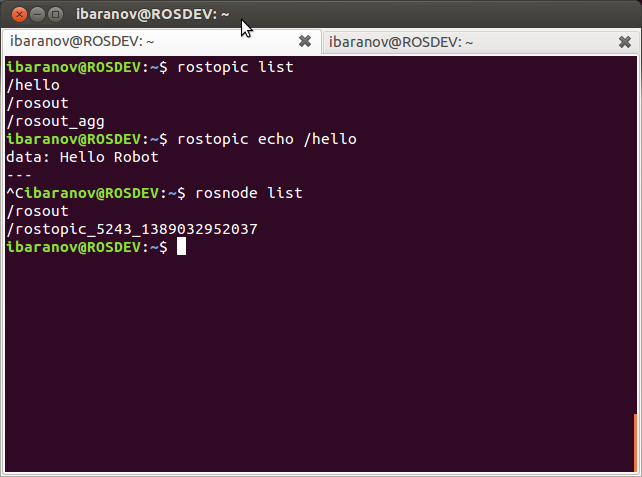

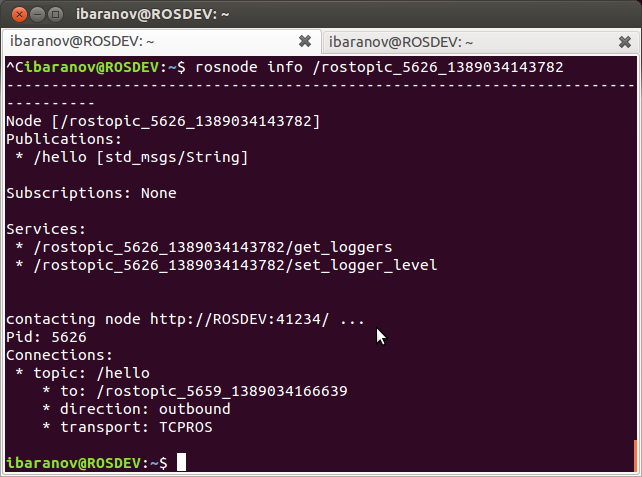

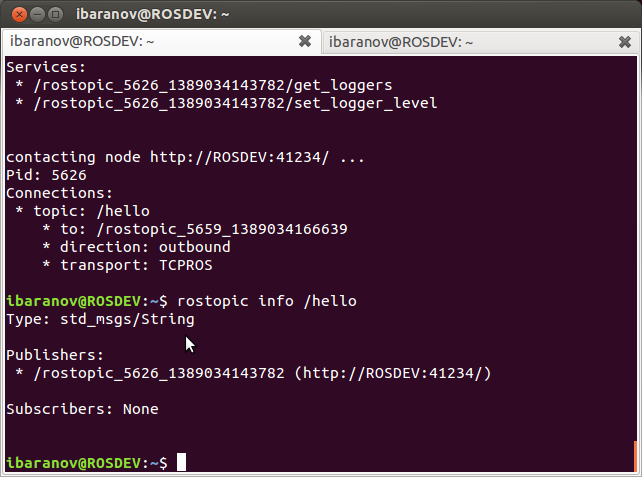

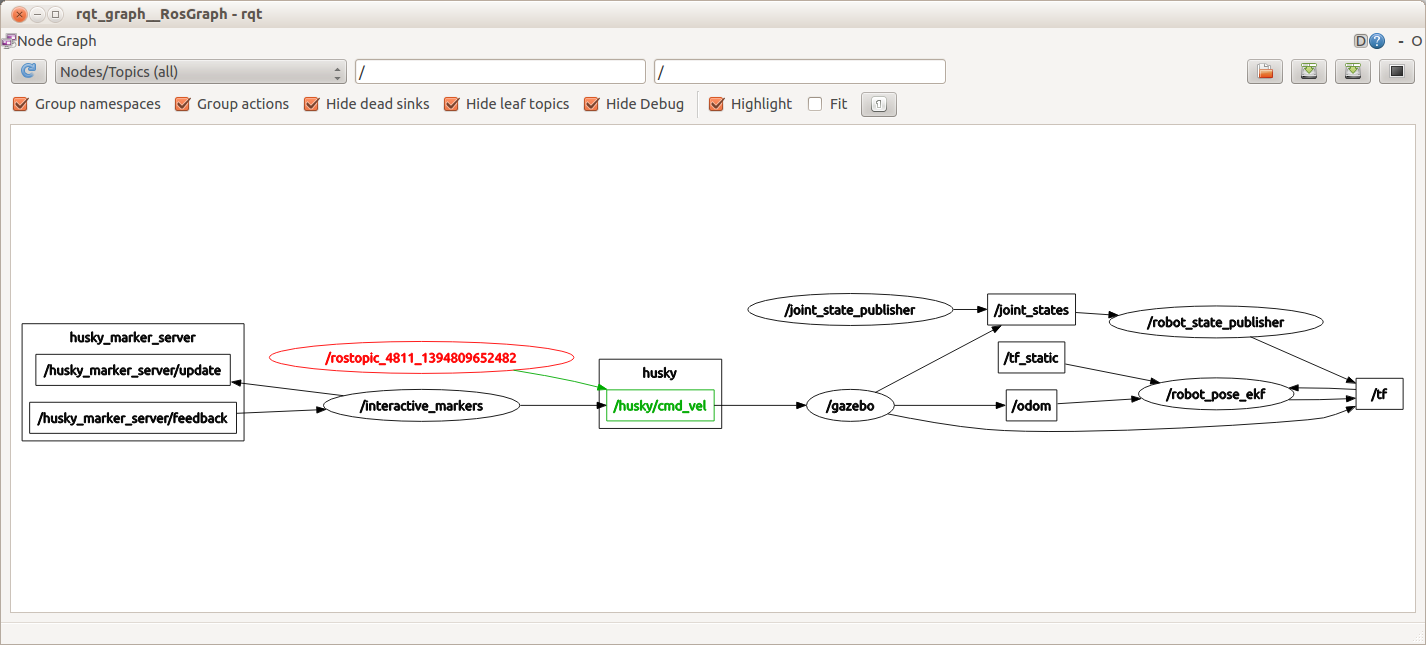

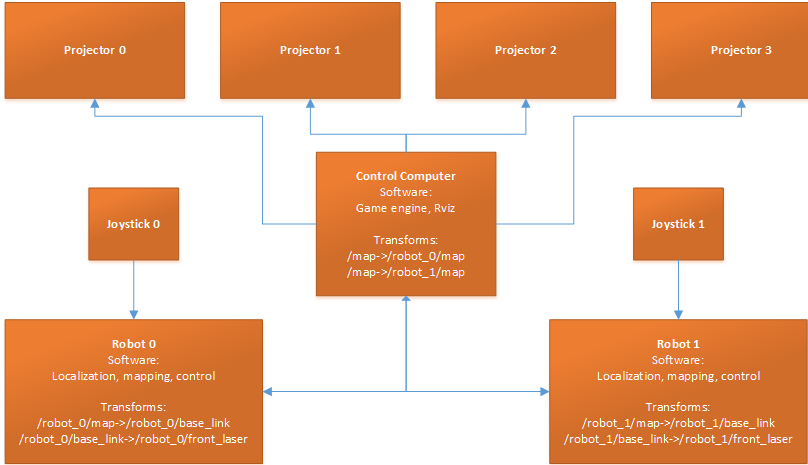

Now, I will make a slight aside here… Multi-robot operation in ROS is not yet what one would call smooth. There are a few good solutions, but not one that is clearly “the one” to use. Since all of our work here had to fit into a week, we took the quick way out. We configured all of the robots to talk to a single ROS Master running on the computer connected to the projectors, and used namespaces to ensure the data for each robot stayed tied to each robot. The resulting architecture was as follows:
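For readers who haven't used namespaces this way: in ROS 1, a node started in a namespace (for example via a `<group ns="...">` tag in a roslaunch file, or the `ROS_NAMESPACE` environment variable) resolves relative topic names like `cmd_vel` under that namespace, while global names starting with `/` are left alone. The sketch below re-implements those basic resolution rules in plain Python to show why two robots can share one master without their topics colliding. The robot names `jackal1`/`jackal2` are hypothetical, and the sketch ignores remapping arguments, which the real client libraries also apply.

```python
def resolve_name(name: str, namespace: str = "/", node_name: str = "") -> str:
    """Resolve a ROS 1 graph resource name (simplified, no remapping).

    - global names ("/map") are used unchanged
    - relative names ("cmd_vel") are prefixed with the node's namespace
    - private names ("~max_speed") are prefixed with the node's full name
    """
    if name.startswith("/"):
        resolved = name
    elif name.startswith("~"):
        resolved = node_name.rstrip("/") + "/" + name[1:].lstrip("/")
    else:
        resolved = namespace.rstrip("/") + "/" + name
    # Collapse any doubled slashes left over from joining the pieces.
    while "//" in resolved:
        resolved = resolved.replace("//", "/")
    return resolved


# Each robot's relative topics land in its own namespace; a global name
# such as "/map" (assumed here to be the shared map) is common to both.
for robot in ("jackal1", "jackal2"):
    ns = "/" + robot
    print(resolve_name("cmd_vel", ns), resolve_name("odom", ns), resolve_name("/map", ns))
```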

All we had to do to sync the robot position and the projector position was to start building the map from a marked (0,0,0) point on the floor.
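Why a shared origin is enough: once the map's (0,0,0) sits on a known spot in the projected image, and the top-down view's scale and orientation are fixed, turning a robot's map-frame position into projector pixels is just a 2D rotation, scale and offset. In our setup RViz did this rendering; the sketch below only shows the underlying conversion, and `origin_px`, `pixels_per_meter` and `theta` are hypothetical calibration values, not numbers from our demo.

```python
import math


def map_to_pixel(x, y, origin_px, pixels_per_meter, theta=0.0):
    """Convert a map-frame point (metres) to projector image coordinates.

    Hypothetical sketch. origin_px is the pixel (u, v) where the marked
    (0, 0) floor point appears; theta is the rotation (radians) of the map
    x-axis relative to the image u-axis. Image v grows downward while map y
    grows "up", hence the sign flip on v.
    """
    c, s = math.cos(theta), math.sin(theta)
    xr = c * x - s * y  # rotate into the image-aligned frame
    yr = s * x + c * y
    u = origin_px[0] + pixels_per_meter * xr
    v = origin_px[1] - pixels_per_meter * yr
    return u, v


# A robot 1 m along x and 0.5 m along y from the marked origin, with the
# origin drawn at pixel (960, 540) and an assumed 100 px per metre.
print(map_to_pixel(1.0, 0.5, (960, 540), 100))
```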

Improvement #3: More interactivity

This was the fun part. We had two robots and everyone loves video games, so we wrote a new package that uses Python (via rospy), GDAL, and Shapely to create a real-life PvP game with our Jackals. Each Jackal was controlled by a person and had the usual features we all expect from video games – weapons, recharging shields, hitpoints, and sound effects. All of the data was rendered and projected in real-time along with our robots’ understanding of their environment.
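The game code itself is still unpublished, so the following is only a hypothetical sketch of the mechanics described above: shields that absorb damage first and recharge over time, hitpoints, and a hit test for a straight-line shot. All names and numbers are invented for illustration, and the geometry uses plain `math` instead of Shapely; a Shapely-based version could, for example, also check whether the shot's `LineString` intersects wall polygons taken from the map.

```python
import math
from dataclasses import dataclass


@dataclass
class Player:
    """Hypothetical game state for one robot; all values are illustrative."""
    x: float
    y: float
    hitpoints: float = 100.0
    shield: float = 50.0
    max_shield: float = 50.0
    shield_regen_per_s: float = 5.0
    radius: float = 0.3  # metres; assumed robot-sized hit circle

    def take_damage(self, amount: float) -> None:
        """Shields absorb damage first; any remainder comes off hitpoints."""
        absorbed = min(self.shield, amount)
        self.shield -= absorbed
        self.hitpoints = max(0.0, self.hitpoints - (amount - absorbed))

    def recharge(self, dt: float) -> None:
        """Regenerate shields over dt seconds, capped at max_shield."""
        self.shield = min(self.max_shield, self.shield + self.shield_regen_per_s * dt)

    @property
    def alive(self) -> bool:
        return self.hitpoints > 0.0


def shot_hits(shooter: Player, heading: float, target: Player, max_range: float = 5.0) -> bool:
    """True if a shot from shooter along heading (radians, map frame) passes
    within target.radius of the target before travelling max_range metres.

    Treats the shot as a line segment and measures the distance from the
    target's centre to the closest point on that segment.
    """
    dx, dy = math.cos(heading), math.sin(heading)        # unit direction of fire
    tx, ty = target.x - shooter.x, target.y - shooter.y  # vector to the target
    t = max(0.0, min(max_range, tx * dx + ty * dy))      # clamp projection to the segment
    cx, cy = dx * t, dy * t                              # closest point on the shot
    return math.hypot(tx - cx, ty - cy) <= target.radius


a, b = Player(0.0, 0.0), Player(3.0, 0.1)
if shot_hits(a, 0.0, b):
    b.take_damage(20.0)  # shield 50 -> 30, hitpoints untouched
b.recharge(2.0)          # shield 30 -> 40
print(b.hitpoints, b.shield)
```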

And, as a final bonus, we used our existing path planning code to create an entire “AI” for the robots. Since the robots already know where they are and how to plan paths, this part was done in literally minutes.
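Because the planner already knows how to get a robot from A to B, an “AI” opponent mostly needs to choose B. One minimal way to do that, sketched here under our own assumptions rather than taken from the actual game, is to aim for a point a fixed standoff distance short of the human-driven robot, facing it, and re-send that goal every so often. In a ROS 1 navigation setup the resulting pose would typically be sent to `move_base` after converting yaw to a quaternion; the function name and the 1.5 m standoff are hypothetical.

```python
import math


def chase_goal(own_xy, target_xy, standoff=1.5):
    """Hypothetical 'AI' goal: a point `standoff` metres short of the target
    on the straight line from us to it, with yaw facing the target.

    Returns (x, y, yaw) in the map frame for an existing path planner.
    """
    dx, dy = target_xy[0] - own_xy[0], target_xy[1] - own_xy[1]
    dist = math.hypot(dx, dy)
    yaw = math.atan2(dy, dx)
    if dist <= standoff:
        # Already close enough: stay put and just turn to face the target.
        return own_xy[0], own_xy[1], yaw
    scale = (dist - standoff) / dist
    return own_xy[0] + dx * scale, own_xy[1] + dy * scale, yaw


print(chase_goal((0.0, 0.0), (4.0, 0.0)))
```

Leaving the actual route to the planner means the AI inherits obstacle avoidance for free; this function only decides where to go.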

The real question

How do I get one for myself?

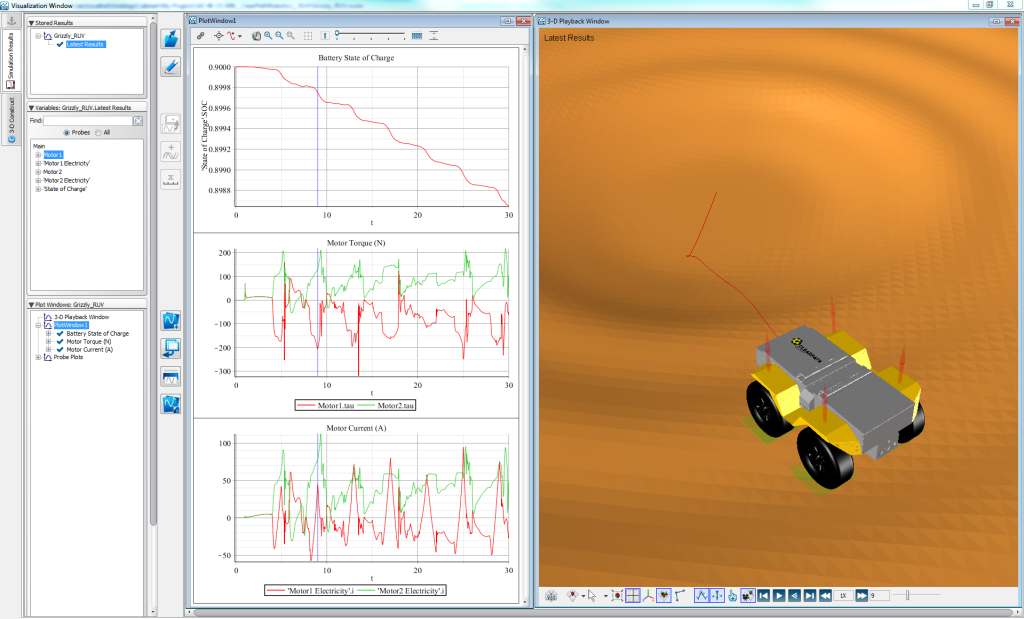

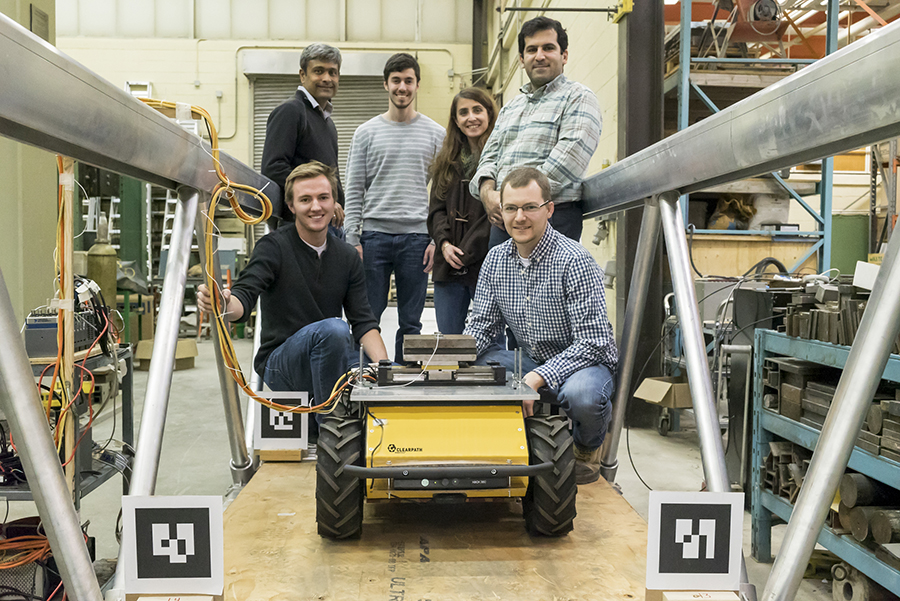

Robots: Obviously, we sell these. I’d personally like to see this redone with Grizzlies.

Projectors: I’m sure there are open-source options or other prosumer options similar to how MIT did it, but if you want it done really well, Christie will be happy to help.

Software: There is an experimental RViz branch here which enables four extra output windows from RViz.

The majority of the on-robot software is either standard with the Jackal or is slightly modified to accommodate the multi-robot situation (and can also be found at our Jackal github repository). We intend to contribute our RViz plugins back, but they too are a little messy. Fortunately, there’s a good general tutorial here on creating new plugins.

The game itself is very messy code, so we’re still keeping it hidden for now. Sorry! :(

If you’re a large school or a research group, please get in touch directly and we’ll see how we can help.

Happy gaming!
